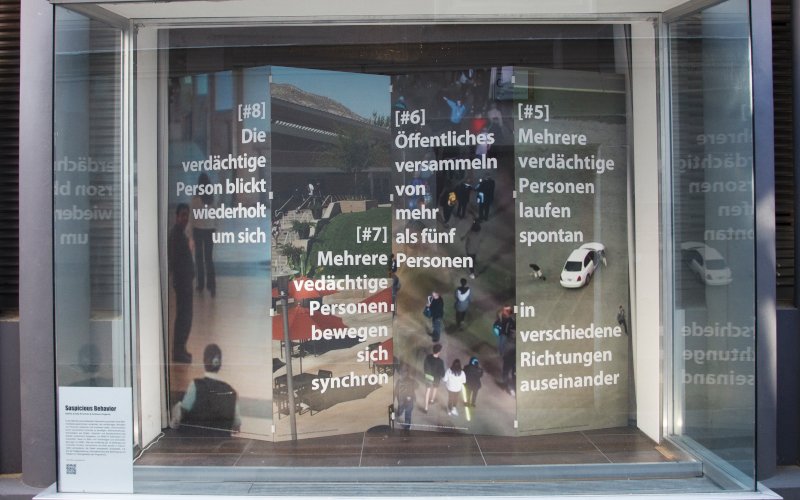

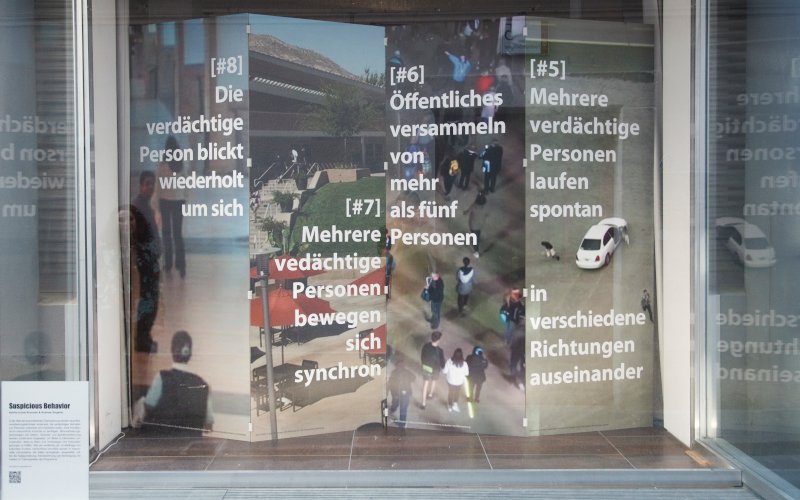

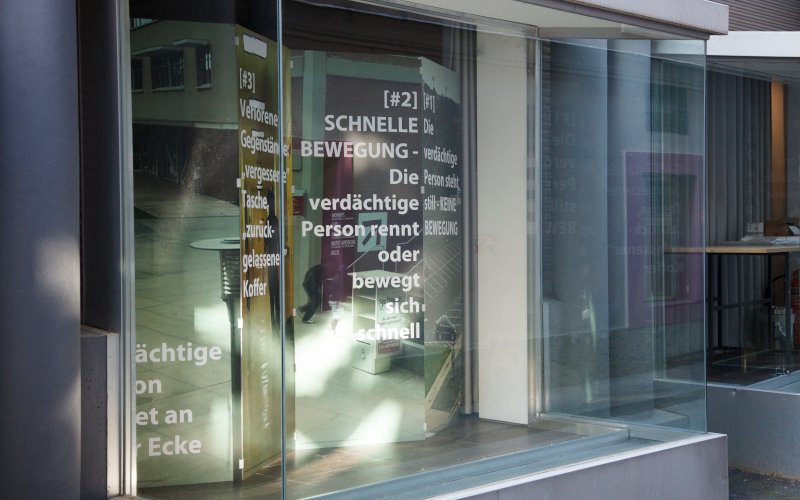

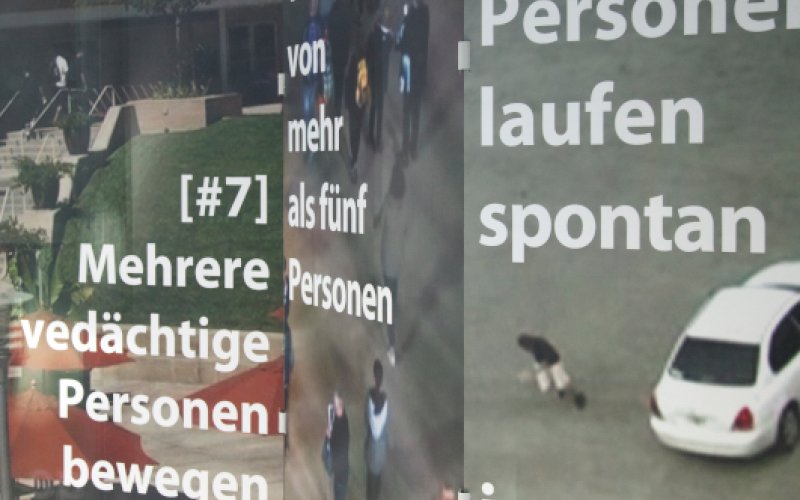

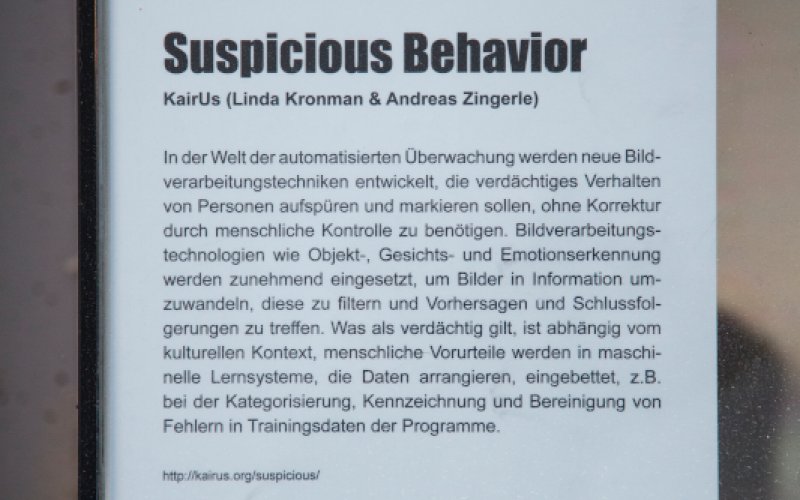

Suspicious Behavior

Video:

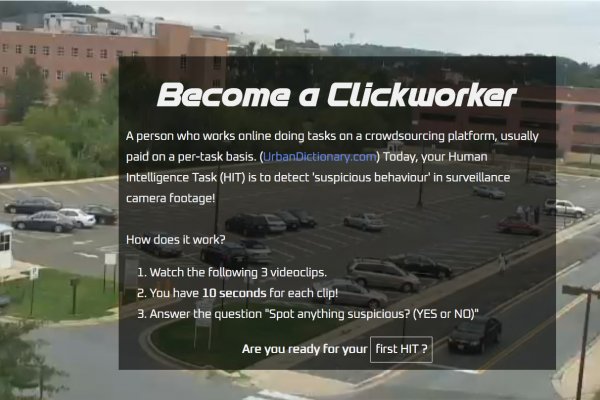

Become a clickworker yourself!

Machine vision technologies, such as object recognition, facial recognition and emotion detection, are increasingly used to turn images into information, filter them and make predictions and inferences. In recent years these technologies have made rapid advances in accuracy. Three factors drive these developments: the revival of neural networks, which enable machines to learn from observed data; access to massive amounts of data to train those networks; and increased processing power. Understandably, this progress has generated excitement among the innovators implementing these technologies.

In the world of automated surveillance, new machine vision techniques are being developed to spot suspicious behaviour without human supervision. As in all pattern recognition applications, the goal is to translate images into behavioural data. Multibillion-dollar investments in object detection technologies assume that it is easy for computers to extract meaning from images and render our bodies into biometric code. Yet trial-and-error approaches have already revealed that machine learning is far from objective and amplifies existing biases. Human biases are embedded in machine learning systems throughout the process of assembling a dataset, for example when categorising, labelling and cleaning training data. What is considered suspicious in one cultural context might be normal in another; hence, developers admit, “it’s challenging to match that information to 'suspicious' behavior”.

Nevertheless, the surveillance industry is developing "smart" cameras that detect abnormal behaviour in order to prevent unwanted activities. Moreover, the current COVID-19 pandemic has accelerated the development of computer vision applications that track new forms of supposedly dubious behaviour.

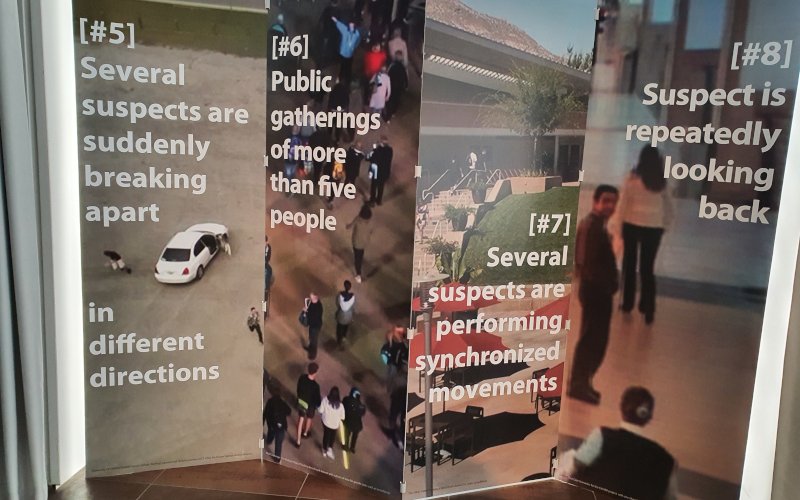

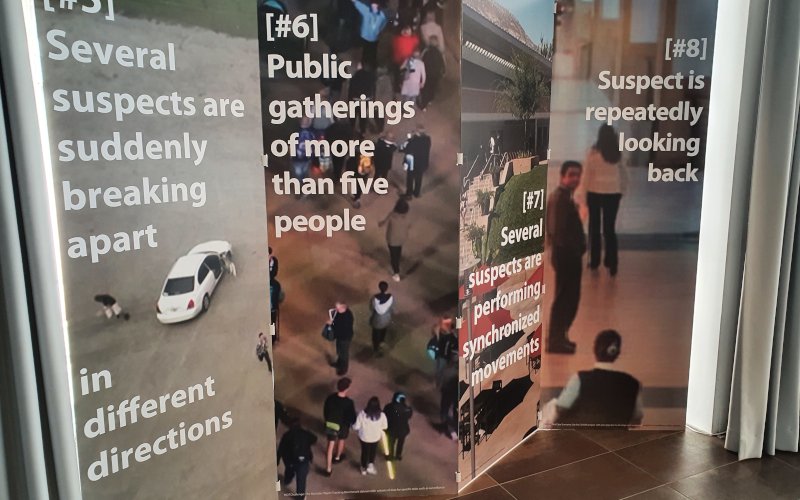

In the artwork "Suspicious Behaviour", the visitor enters the world of hidden human labour that forms the foundation of how 'intelligent' computer vision systems interpret our actions. Through a home office set-up and an image labelling tutorial, the visitor experiences firsthand the tedious work of outsourced annotators. In an interactive tutorial for a fictional company, the user is motivated and instructed to take on the task of labelling suspicious behaviour. The video clips in the tutorial are taken from various open machine learning datasets for surveillance and action detection. Gradually, the tutorial reveals how complex human behaviour is reduced to banal categories of anomalous and normal behaviour. The guidelines defining suspicious behaviour, illustrated in a poster series and drilled in the tutorial exercises, are compiled from lists issued by various authorities. Because the user is given limited time to perform the labelling tasks, the artwork prompts reflection on how easily biases and prejudices become embedded in machine vision.

"Suspicious Behaviour" asks: is training machines to understand human behaviour actually just as much about programming human behaviour? What role does the 'collective intelligence' of micro-tasking annotators play in shaping how machines detect behaviour? And in which ways are the world views of developers embedded in the process of meaning-making as they frame the annotation tasks?

Link:

Cooperation:

Kindly supported by Bergen Kommune